In the last decade, bots and algorithms have embedded themselves in society, becoming essential parts of our lives. They’re everywhere: deciding what we should watch next on Netflix, who the police should monitor, and what shares traders should offload. They’ve also quietly slipped into the journalism industry, changing the way we produce and consume content.

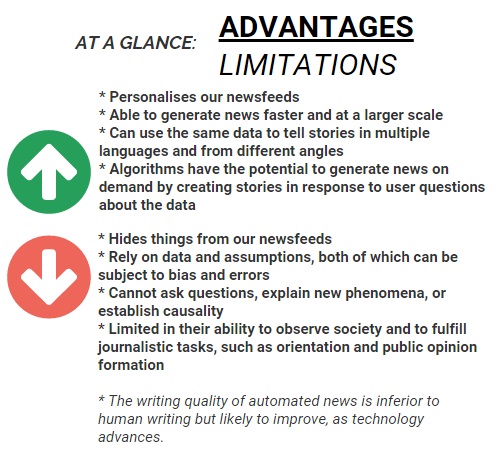

We’re on the precipice of a new digital era for journalism, one equal parts exciting and terrifying. The rise of computer software has continued to fuel fears that robot content will eventually eliminate human jobs and overthrow the journalism industry as we know it. Al Jazeera ran a feature on the topic late last year, suggesting humans and algorithms will work with – not against – one another in the future. Computers are good with data, but human creativity is not as easily replicated.

Robot journalism: the end of human reporters? – The Listening Post (Feature)

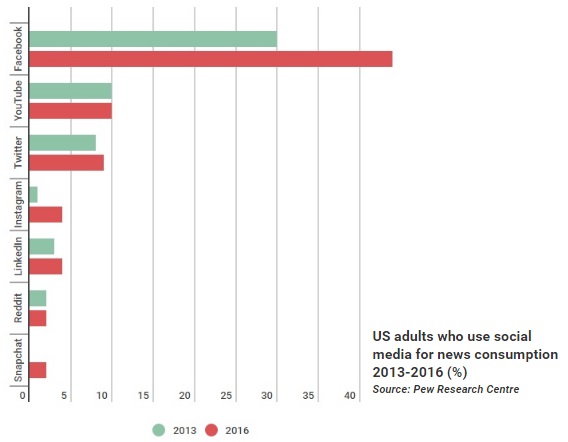

As concerning as these algorithms could still prove, a less obvious kind poses an arguably bigger threat: those on social media sites. As incessant internet usage becomes habitual and gradually necessary, people are heading to social media networks such as YouTube or Facebook – the latter of which boasts monthly user numbers of roughly one-fifth of the world’s population – to get their news.

Social media, fast becoming a middle-man between story and public, is unlike traditional news channels. It has different conditions for use, and remains more nuanced than its hyper-convenience would have us believe. While algorithms on social media sites are giving us unprecedented control over what posts we see, they also hide a lot of them, subtly skewing our points of view. Echo chambers and filter bubbles are not new phenomena, though they remain relatively undefined in our new digital age.

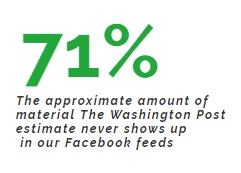

Most would be aware that personalized newsfeeds don’t show us every piece of content, but remain perhaps a little ignorant of the extent to which filtering occurs. The Washington Post estimates that around 71 percent of material never even shows up in our Facebook feeds, thanks to algorithms. Facebook isn’t the only social media giant using algorithms – Instagram introduced one earlier this year, while Twitter has experimented with its “While you were away” section – though it is the most widely used for the consumption of journalism.

There’s still a major misconception around how newsfeeds work, with a recent study from the University of Illinois finding 62.5 percent of participants had no idea Facebook vetted posts at all. This out-of-sight-out-of-mind paradigm is not always a problem, especially in small doses. For casual users, Facebook’s reduction of “unwanted” content and noise can even be helpful; that noise is, incidentally, a problem a lot of people have with Twitter and Reddit. But a problem arises when people rely exclusively on their newsfeed for more and more significant things, like informing how they vote and make decisions.

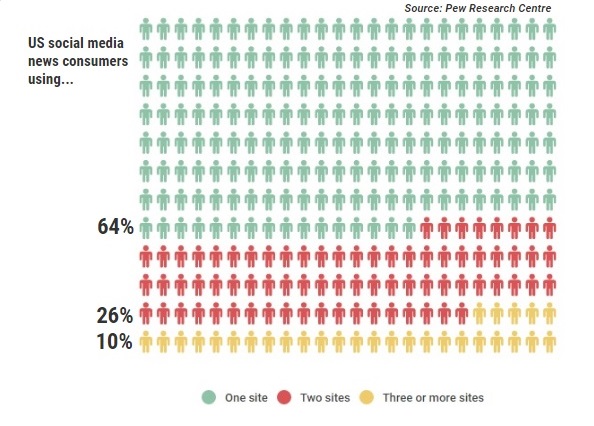

Pew Research Center found earlier this year that 64 percent of Americans who use social media get their news from only one site. Even more unsettling, Gizmodo revealed in May that a number of Facebook workers regularly kept stories of interest to right-wing readers out of the site’s trending section. If people are increasingly getting their news from a single source, and that source is only showing one side of the story, this raises some real questions regarding democracy. More than half of all American adults use Facebook: enough people, according to some academics, to potentially swing a national election.

While at this stage little has been proven about the implications for democracy if algorithms are to become part of the fourth estate, the journalism industry should be wary. Algorithms have everything journalists are trained to question: they’re complex, secret, and driving considerable parts of society. They’re guiding us toward a certain way of thinking, of being, so subtly that most of us aren’t aware it’s happening. Keeping an eye on this is exactly why the fourth estate exists.

There are fixes of sorts at this point, though they’re limited and impractical at best. Sociologists have called for social media sites to offer the option of depersonalized newsfeeds, while Balancer, a browser extension in production that aims to increase “news diversity”, attempts to level the field. Until something more feasible comes along, however, it’s likely any shift in the way we consume news will come from outside the realm of technology.

Readers have been reacting to journalism since the beginning of the printing press. But now journalism is beginning to react to readers, and it’s raising a number of questions we need to consider.

Bots and algorithms are becoming key actors in our society. These increasingly authoritative pieces of code were created to be problem solvers, to efficiently complete tedious tasks. But without vigilance, they could morph into problem makers. When managed properly, algorithmic software can be a powerful tool for humans to use. But as it becomes more powerful and ubiquitous, it is imperative we understand each other and establish a positive relationship.

REFERENCES

Barot, T 2015, The Botification of News, Nieman Lab, viewed 1 August 2016, http://www.niemanlab.org/2015/12/the-botification-of-news/.

Benton, J 2016, Facebook is making its News Feed a little bit more about your friends and a little less about publishers, Nieman Lab, viewed 5 August 2016, http://www.niemanlab.org/2016/06/facebook-is-making-its-news-feed-a-little-bit-more-about-your-friends-and-a-little-less-about-publishers/.

Dewey, C 2015, If you use Facebook to get your news, please – for the love of democracy – read this first, The Washington Post, viewed 3 August 2016, https://www.washingtonpost.com/news/the-intersect/wp/2015/06/03/if-you-use-facebook-to-get-your-news-please-for-the-love-of-democracy-read-this-first/.

Graefe, A 2016, Guide to Automated Journalism, Tow Center for Digital Journalism, viewed 6 August 2016, http://towcenter.org/research/guide-to-automated-journalism/.

Hardt, M 2014, How Big Data Is Unfair, Medium, viewed 5 August 2016, https://medium.com/@mrtz/how-big-data-is-unfair-9aa544d739de#.fffyqclcj.

Mullany, A 2015, Platforms Decide Who Gets Heard, Nieman Lab, viewed 1 August 2016, http://www.niemanlab.org/2015/12/platforms-decide-who-gets-heard/.

Nunez, M 2016, Former Facebook Workers: We Routinely Suppressed Conservative News, Gizmodo, viewed 3 September 2016, http://gizmodo.com/former-facebook-workers-we-routinely-suppressed-conser-1775461006.

Ravi, M 2015, Robot journalism: the end of human reporters?, Al Jazeera, viewed 6 August 2016, https://www.youtube.com/watch?v=ci-rHRJlFew.

Steiner, C 2012, Algorithms Are Taking Over The World, TEDx Talks, viewed 5 August 2016, https://www.youtube.com/watch?v=H_aLU-NOdHM.

Virdee, K 2015, When Recommendations Become News, Nieman Lab, viewed 1 August 2016, http://www.niemanlab.org/2015/12/when-recommendations-become-news/.